Our understanding of how the brain and AI (Artificial Intelligence) work has advanced considerably.

The best model of the human brain we have today dates from the 1970s and is based on neural networks. Each characteristic is associated with a piece of knowledge or an emotion. Our memories are linked to each other by common characteristics: the brain reuses features and does not re-record common elements. What we remember is therefore a reconstruction, and we rebuild concepts whenever we need them. Eventually, the brain eliminates concepts that are not often called upon. This happens at night, during REM (rapid eye movement) sleep. For example, if you’ve been bitten by a dog, you are less likely to forget it, and you will associate more dangerous characteristics with dogs.
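As a rough illustration of the idea above, here is a toy sketch in Python. It is not a model of the brain: the class name `FeatureMemory`, its methods and the example features are all hypothetical, chosen only to show features stored once, concepts linked by the features they share, and rarely used concepts being pruned.

```python
class FeatureMemory:
    """Toy memory: concepts are sets of features, and features are stored once."""

    def __init__(self):
        self.features = set()   # every known feature, recorded a single time
        self.concepts = {}      # concept name -> set of feature names
        self.uses = {}          # concept name -> how often it was recalled

    def learn(self, name, features):
        # Common features are reused, not re-recorded (set semantics).
        self.features.update(features)
        self.concepts[name] = set(features)
        self.uses[name] = 0

    def recall(self, name):
        # Recalling a concept rebuilds it from its features and counts the use.
        self.uses[name] += 1
        return sorted(self.concepts[name])

    def linked(self, name):
        # Other concepts that share at least one feature with `name`.
        mine = self.concepts[name]
        return {
            other: sorted(mine & feats)
            for other, feats in self.concepts.items()
            if other != name and mine & feats
        }

    def prune(self, min_uses=1):
        # Hypothetical "overnight" step: drop concepts recalled too rarely.
        rarely_used = [n for n, count in self.uses.items() if count < min_uses]
        for n in rarely_used:
            del self.concepts[n]
            del self.uses[n]
        return sorted(rarely_used)


memory = FeatureMemory()
memory.learn("dog", {"fur", "four legs", "barks"})
memory.learn("cat", {"fur", "four legs", "meows"})
memory.learn("bicycle", {"two wheels", "pedals"})
memory.recall("dog")
memory.recall("cat")

links = memory.linked("dog")   # the cat shares "four legs" and "fur"
forgotten = memory.prune()     # the bicycle was never recalled
```

In this sketch the dog and the cat are linked only through the features they have in common, and the bicycle is forgotten because nothing ever called on it.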

Vision works in a similar way. At the back of each eye there is a blind spot, a dark disc where the optic nerve is attached. We can’t see what’s in front of us without the brain, which reconstructs the visual environment from the periphery. This is why we can deceive the eye with optical illusions.

This new understanding makes it possible to treat post-traumatic disorders with EMDR (Eye Movement Desensitization and Reprocessing). It reuses the eye movements of this phase of sleep to rebalance the neural network. For example, war veterans are taught that a loud noise is not necessarily associated with an explosion.

In the early 2010s, scientists set out to model how the brain works. The Human Brain Project aimed to create a program that would reproduce brain function on a computer. At first, this model couldn’t do much. Fei-Fei Li, from Stanford University, observed that a baby doesn’t know much at first and only learns through exposure to its environment. The researchers then exposed the program to videos, images, sounds and text.

How we teach computers to understand pictures (2015) – Fei-Fei Li – TED: https://youtu.be/40riCqvRoMs?si=FLj3q0q4yjGhMrDG

To mimic a child’s learning, each piece of content is associated with an emotion and a degree of strength, for example “funny” or “very funny”. The Human Brain Project learned as well as a human child. For example, it can recognize a cat.

In 2017, Facebook created two neural networks. Facebook had access to a lot of content for training. They made the two networks talk to each other. Facebook stopped the experiment because the two networks had created a new language that humans didn’t understand.

Sam Altman has shown that these networks have a real talent for languages, and his company has developed its own text-only neural network: ChatGPT. This kind of network is called an LLM (large language model). At the same time, Sam Altman’s company, OpenAI, developed another neural network trained solely on images associated with text: DALL-E. It relies on CLIP (Contrastive Language-Image Pretraining). DALL-E uses ChatGPT to give context to images.

In 2022, OpenAI finished training DALL-E and ChatGPT and made them available to the public. These AIs are generative artificial intelligences: they are capable of understanding an image or a text and expressing themselves on demand. For example, they can write a 5-line poem about spring, or draw a daisy in the style of Van Gogh. For this purpose, DALL-E and ChatGPT are extremely powerful and have met with enormous success. Researchers have developed applications for these AIs: recognizing a weed in a field and pulling it out mechanically, recognizing a cancerous tumor on a lung X-ray, generating descriptions for the visually impaired, and so on. LLMs are capable of improving a text. They are extremely efficient at translating everyday documents. They are replacing many workers who used to translate for a living.

But because AI speaks so well, humans have the impression that it knows. Some use it as a personal assistant or as an oracle. But these artificial intelligences work on form, not content. They don’t reason, they don’t tell the truth.

Big companies like Microsoft or Google, which make ChatGPT or one of its clones available, wanted to capitalize on people’s expectations. They’ve dressed up the LLMs so that their output looks like reasoning, for example by listing the 5 best restaurants in town or giving relationship advice.

Running neural networks, and generative AI in particular, is extremely energy-intensive. The processors that run neural networks aren’t built for it. These processors test all eventualities until they find the right one. In the pilot phase of Google’s AI, with a limited number of users, Google’s power consumption increased by 48%. Generating an image with DALL-E consumes as much electricity as it takes to charge a smartphone. Generating a page of text with ChatGPT emits as much CO2 as driving 6 km by car. In December 2023, ChatGPT had 10 million daily users. For every twenty texts requested from ChatGPT, half a liter of clean water is consumed to cool the data centers.

Generating an image consumes as much energy as charging a smartphone (and other alarming figures on AI) – Usbek & Rica (in French): https://usbeketrica.com/fr/article/generer-une-image-consomme-autant-d-energie-que-pour-charger-un-smartphone-et-autres-chiffres-affolants-sur-les-ia
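To get a feel for the water figure quoted above, here is a small back-of-the-envelope calculation in Python. The half liter per twenty texts and the 10 million daily users come from the text; the number of texts each user requests per day is not given, so `TEXTS_PER_USER_PER_DAY = 1` is purely an assumption for illustration, and the function name is hypothetical.

```python
# Figures taken from the article.
WATER_LITERS_PER_BATCH = 0.5   # half a liter of clean water...
TEXTS_PER_BATCH = 20           # ...for every twenty texts requested
DAILY_USERS = 10_000_000       # daily ChatGPT users in December 2023

# Assumption (not in the article): each user requests one text per day.
TEXTS_PER_USER_PER_DAY = 1


def water_liters(texts):
    """Cooling water, in liters, for a given number of generated texts."""
    return texts * WATER_LITERS_PER_BATCH / TEXTS_PER_BATCH


per_text = water_liters(1)                                      # 0.025 L, i.e. 25 mL
per_day = water_liters(DAILY_USERS * TEXTS_PER_USER_PER_DAY)    # 250000.0 L

print(f"{per_text * 1000:.0f} mL per text")
print(f"{per_day:,.0f} L per day under the one-text-per-user assumption")
```

Under that assumption the total is 250,000 liters of water per day. The result scales linearly, so a user who requests ten texts a day would account for ten times as much.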

To train its neural networks, OpenAI made extensive use of workers in Kenya who were underpaid (less than $2 an hour), mistreated and had no social security. Many of these workers have been traumatized by the shocking content they had to review.

‘It’s destroyed me completely’: Kenyan moderators decry toll of training of AI models – The Guardian: https://www.theguardian.com/technology/2023/aug/02/ai-chatbot-training-human-toll-content-moderator-meta-openai

Manufacturers are running massive communication campaigns to show that AI can help with health, agriculture, climate change and construction. For example, Google’s AlphaFold has made it possible to understand millions of proteins, whereas the entire scientific community used to be able to understand a maximum of two per year. This is being used to create medicines or to understand how pollutants affect the human body. Another example: robots that water or apply pesticides only where necessary. In these cases, AI will benefit humanity.

Google DeepMind’s Latest AI Model Is Poised to Revolutionize Drug Discovery – Time: https://time.com/6975934/google-deepmind-alphafold-3-ai/

On the other hand, if we use it for entertainment, to make people laugh or to replace office work, we’re going to make the climate crisis worse, as it would generate tons of extra CO2. Using artificial intelligence to listen to an entire videoconference and summarize the discussion has a major impact on the environment. There is a human cost and an environmental cost.

On the Unsustainability of ChatGPT: Impact of Large Language Models on the Sustainable Development Goals – UNU Macau: https://unu.edu/macau/blog-post/unsustainability-chatgpt-impact-large-language-models-sustainable-development-goals

Our use of AI needs to evolve alongside public research into microprocessors, so that they consume less power. Only one company, TSMC in Taiwan, produces microprocessors that meet the demands of OpenAI, and they are not efficient enough.

We need to encourage research, science and education to find more efficient mathematical models. China made a major effort to educate part of its population over ten years ago. A Chinese researcher has invented an LLM (large language model) that requires a hundred times less energy: DeepSeek.

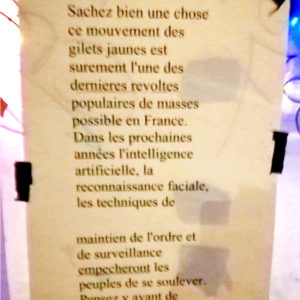

Legislation needs to evolve to prevent these artificial intelligences from being trained by quasi-slaves, and to avoid our personal data ending up in the content generated by these AIs.

Replacing a human with an AI just creates more CO2. Humans will continue to exist, but without work.

Let’s use artificial intelligence, but only where it is relevant.

Strategies for mitigating the global energy and carbon impact of artificial intelligence – SDG: https://sdgs.un.org/sites/default/files/2023-05/B29%20-%20Xue%20-%20Strategies%20for%20mitigating%20the%20global%20energy%20and%20carbon%20impact%20of%20AI.pdf
